AMD vs. Nvidia: AI Chip Race Heats Up with Instinct MI300 Memory Boost

AMD Readies a Memory Boost for its Instinct MI300 as the AI Race Heats Up

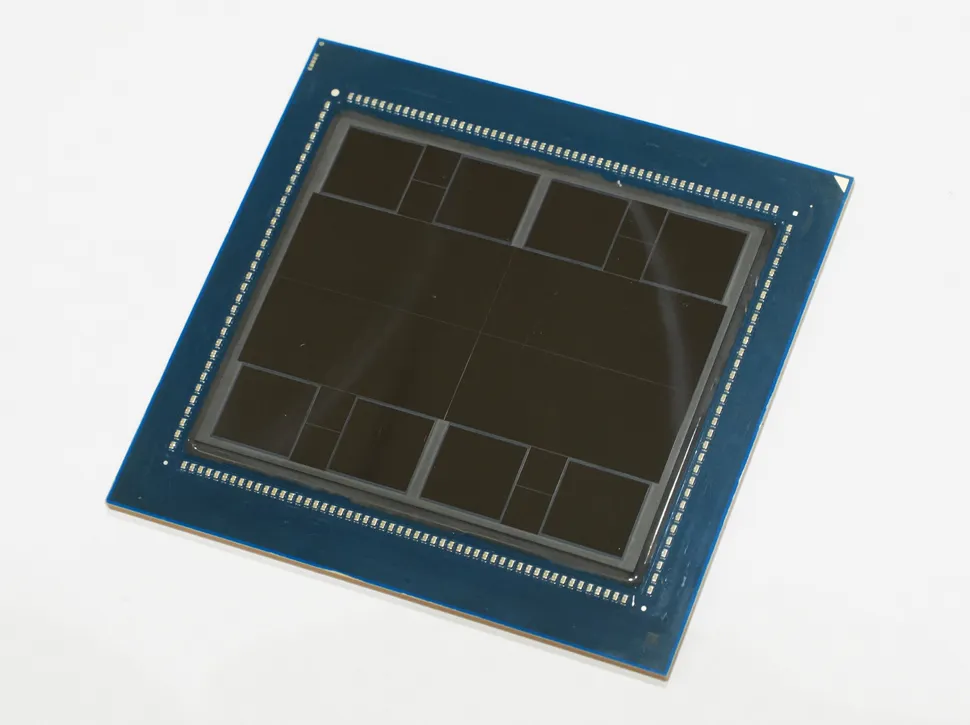

The race for dominance in AI chips is heating up as Advanced Micro Devices (AMD) prepares a memory upgrade for its flagship AI accelerator, the Instinct MI300. The move is a response to intense competition from Nvidia, and it aims to give researchers and developers more memory capacity, alongside more processing power, for demanding AI applications.

Breaking Down the Memory Boost

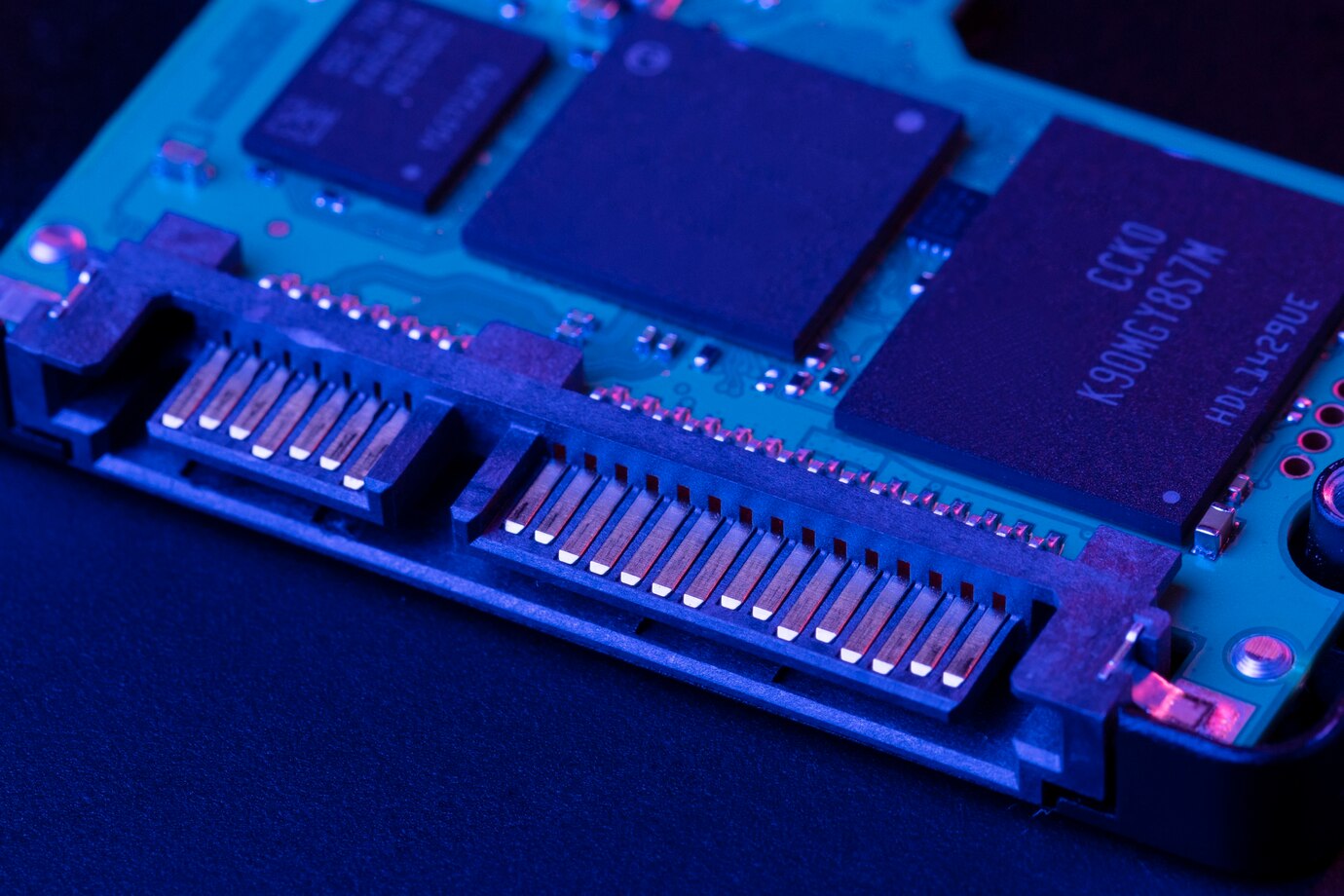

The Instinct MI300 family currently comes in two memory configurations:

- MI300A: ships with 128GB of high-bandwidth memory (HBM3).

- MI300X: the higher-capacity model, with 192GB of HBM3.

AMD does, however, plan to introduce more memory options in the future, possibly with even larger capacities. Several factors drive this strategy:

- The Increasing Need for AI Capabilities: As AI models grow larger and more complex, they need more memory to hold their parameters and to handle the enormous datasets used for training and inference.

- Answering Nvidia’s Challenge: The H100, Nvidia’s leading product in the AI chip market, currently has 80GB of HBM3 memory. AMD hopes to gain a competitive edge by offering larger memory options.

- Realizing Potential: Memory expansion may improve performance for some AI tasks, especially large language models and scientific simulations that move and manipulate a lot of data.
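To make the capacity argument concrete, here is a rough back-of-the-envelope sketch in Python. It compares the memory needed for a model's weights against the capacities quoted above. The 70-billion-parameter model size, the 2 bytes per parameter (16-bit weights), and the function names are illustrative assumptions, not figures from AMD or Nvidia. The estimate also ignores activations, caches, and optimizer state, so real requirements are higher.

```python
# Capacities quoted in the article, in GB (decimal gigabytes assumed).
CAPACITY_GB = {"H100": 80, "MI300A": 128, "MI300X": 192}


def weights_gb(params_billions: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for the model weights alone, in GB.

    One billion parameters at one byte each is roughly 1 GB, so the
    estimate is simply (billions of parameters) * (bytes per parameter).
    """
    return params_billions * bytes_per_param


def fits(params_billions: float, capacity_gb: float, bytes_per_param: int = 2) -> bool:
    """True if the weights alone fit in a single accelerator's memory."""
    return weights_gb(params_billions, bytes_per_param) <= capacity_gb


# Hypothetical model size chosen only for illustration.
MODEL_PARAMS_BILLIONS = 70

for chip, capacity in CAPACITY_GB.items():
    verdict = "fit" if fits(MODEL_PARAMS_BILLIONS, capacity) else "do not fit"
    print(f"{chip} ({capacity} GB): weights {verdict} on a single device")
```

Under these assumptions the weights come to about 140GB, which exceeds both 80GB and 128GB but fits within 192GB. When the weights do not fit on one device, the model has to be split across several accelerators, which is one reason larger per-chip memory can matter.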

Beyond the Memory Boost: The Bigger Picture

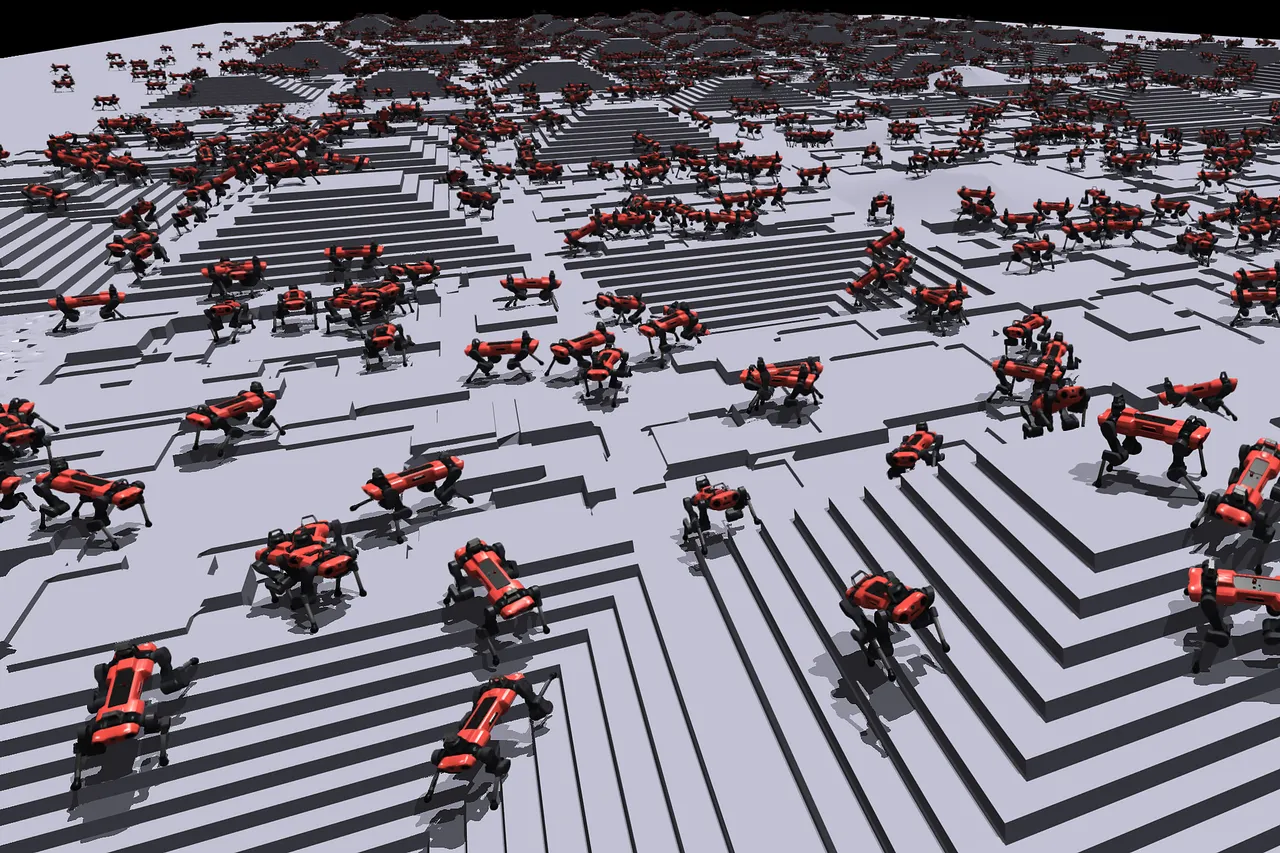

Although the memory increase is an important step, it is only one part of the picture. An AI chip’s overall performance depends on a wider range of factors, such as:

- Chip Architecture: A chip’s underlying design largely determines its processing power and efficiency.

- Software Optimization: The software tools and libraries used with the chip can greatly affect its speed and ease of use.

- Workload Requirements: Different AI applications have different processing and memory needs. In the end, the “best” chip depends on the particular use case and the type of AI workload it must handle.

The Path Ahead: A Competitive Marketplace

With its decision to broaden the memory options for the Instinct MI300, AMD has signaled its commitment to staying at the forefront of the fast-evolving AI chip market. This move, together with the company’s continued work on chip architecture and software optimization, is likely to influence the future of AI computing. It also gives developers and researchers more options and more capable tools for pushing the frontiers of AI.

The field of artificial intelligence is changing constantly, and the rivalry between AMD and Nvidia is likely to intensify in the coming years. That competition should keep driving progress in AI hardware, producing more powerful and more versatile AI chips with the potential to transform many industries and open up new avenues of research.
